Non-negative Poisson Loss Reconstruction (APGM)#

This example demonstrates the use of class pgm.PGMStepSize to solve the non-negative reconstruction problem with Poisson negative log likelihood loss

where \(A\) is the forward operator, \(\mathbf{y}\) is the measurement, \(\mathbf{x}\) is the signal reconstruction, and \(I(\mathbf{x}^{(0)} \geq 0)\) is the non-negative indicator.

This example also demonstrates the application of numpy.BlockArray, functional.SeparableFunctional, and functional.ZeroFunctional to implement the forward operator \(A(\mathbf{x}) = A_0(\mathbf{x}^{(0)}) + A_1(\mathbf{x}^{(1)})\) and the selective non-negativity constraint that only applies to \(\mathbf{x}^{(0)}\).

[1]:

import matplotlib.gridspec as gridspec

import matplotlib.pyplot as plt

import scico.numpy as snp

import scico.random

from scico import functional, loss, plot

from scico.numpy import BlockArray

from scico.operator import Operator

from scico.optimize.pgm import (

AcceleratedPGM,

AdaptiveBBStepSize,

BBStepSize,

LineSearchStepSize,

RobustLineSearchStepSize,

)

from scico.typing import Shape

from scico.util import device_info

from scipy.linalg import dft

plot.config_notebook_plotting()

Construct a dictionary, a reference random reconstruction, and a test measurement signal consisting of the synthesis of the reference reconstruction.

[2]:

m = 1024 # signal size

n = 8 # dictionary size

n0 = 2

n1 = n - n0

# Create dictionary with bump-like features.

D = ((snp.real(dft(m))[1 : n + 1, :m]) ** 12).T

D0 = D[:, :n0]

D1 = D[:, n0:]

# Define composed operator.

class ForwardOperator(Operator):

"""Toy problem non-linear forward operator with different treatment

of x[0] and x[1].

Attributes:

D0: Matrix multiplying x[0].

D1: Matrix multiplying x[1].

"""

def __init__(self, input_shape: Shape, D0, D1, jit: bool = True):

self.D0 = D0

self.D1 = D1

output_shape = (D0.shape[0],)

super().__init__(

input_shape=input_shape,

input_dtype=snp.complex64,

output_dtype=snp.complex64,

output_shape=output_shape,

jit=jit,

)

def _eval(self, x: BlockArray) -> BlockArray:

return 10 * snp.exp(-D0 @ x[0]) + 5 * snp.exp(-D1 @ x[1])

x_gt, key = scico.random.uniform(((n0,), (n1,)), seed=12345) # true coefficients

A = ForwardOperator(x_gt.shape, D0, D1)

lam = A(x_gt)

y, key = scico.random.poisson(lam, shape=lam.shape, key=key) # synthetic signal

Set up the loss function and the regularization.

[3]:

f = loss.PoissonLoss(y=y, A=A)

g0 = functional.NonNegativeIndicator()

g1 = functional.ZeroFunctional()

g = functional.SeparableFunctional([g0, g1])

Define common setup: maximum of iterations and initial estimate of solution.

[4]:

maxiter = 50

x0, key = scico.random.uniform(((n0,), (n1,)), key=key)

Define plotting functionality.

[5]:

def plot_results(hist, str_ss, L0, xsol, xgt, Aop):

# Plot signal, coefficients and convergence statistics.

fig = plot.figure(

figsize=(12, 6),

tight_layout=True,

)

gs = gridspec.GridSpec(nrows=2, ncols=3)

fig.suptitle(

"Results for PGM Solver and " + str_ss + r" ($L_0$: " + "{:4.2f}".format(L0) + ")",

fontsize=16,

)

ax0 = fig.add_subplot(gs[0, 0])

plot.plot(

hist.Objective,

ptyp="semilogy",

title="Objective",

xlbl="Iteration",

fig=fig,

ax=ax0,

)

ax1 = fig.add_subplot(gs[0, 1])

plot.plot(

hist.Residual,

ptyp="semilogy",

title="Residual",

xlbl="Iteration",

fig=fig,

ax=ax1,

)

ax2 = fig.add_subplot(gs[0, 2])

plot.plot(

hist.L,

ptyp="semilogy",

title="L",

xlbl="Iteration",

fig=fig,

ax=ax2,

)

ax3 = fig.add_subplot(gs[1, 0])

plt.stem(snp.concatenate((xgt[0], xgt[1])), linefmt="C1-", markerfmt="C1o", basefmt="C1-")

plt.stem(snp.concatenate((xsol[0], xsol[1])), linefmt="C2-", markerfmt="C2x", basefmt="C1-")

plt.legend(["Ground Truth", "Recovered"])

plt.xlabel("Index")

plt.title("Coefficients")

ax4 = fig.add_subplot(gs[1, 1:])

plot.plot(

snp.vstack((y, Aop(xgt), Aop(xsol))).T,

title="Fit",

xlbl="Index",

lgnd=("y", "A(x_gt)", "A(x)"),

fig=fig,

ax=ax4,

)

fig.show()

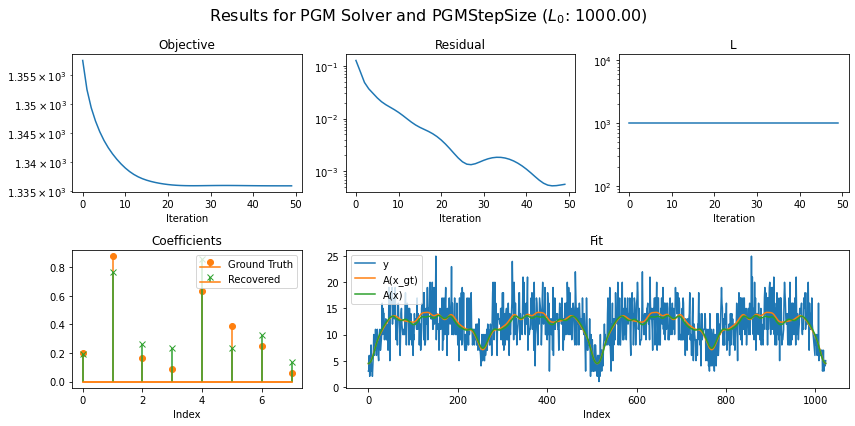

Use default PGMStepSize object, set L0 based on norm of Forward operator and set up AcceleratedPGM solver object. Run the solver and plot the recontructed signal and convergence statistics.

[6]:

L0 = 1e3

str_L0 = "(Specifically chosen so that convergence occurs)"

solver = AcceleratedPGM(

f=f,

g=g,

L0=L0,

x0=x0,

maxiter=maxiter,

itstat_options={"display": True, "period": 10},

)

str_ss = type(solver.step_size).__name__

print(f"Solving on {device_info()}\n")

print("============================================================")

print("Running solver with step size of class: ", str_ss)

print("L0 " + str_L0 + ": ", L0, "\n")

x = solver.solve() # Run the solver.

hist = solver.itstat_object.history(transpose=True)

plot_results(hist, str_ss, L0, x, x_gt, A)

Solving on GPU (NVIDIA GeForce RTX 2080 Ti)

============================================================

Running solver with step size of class: PGMStepSize

L0 (Specifically chosen so that convergence occurs): 1000.0

Iter Time Objective L Residual

-----------------------------------------------

0 1.30e+00 1.358e+03 1.000e+03 1.253e-01

10 2.83e+00 1.339e+03 1.000e+03 1.304e-02

20 3.07e+00 1.336e+03 1.000e+03 3.918e-03

30 3.31e+00 1.336e+03 1.000e+03 1.620e-03

40 3.54e+00 1.336e+03 1.000e+03 1.098e-03

49 3.75e+00 1.336e+03 1.000e+03 5.685e-04

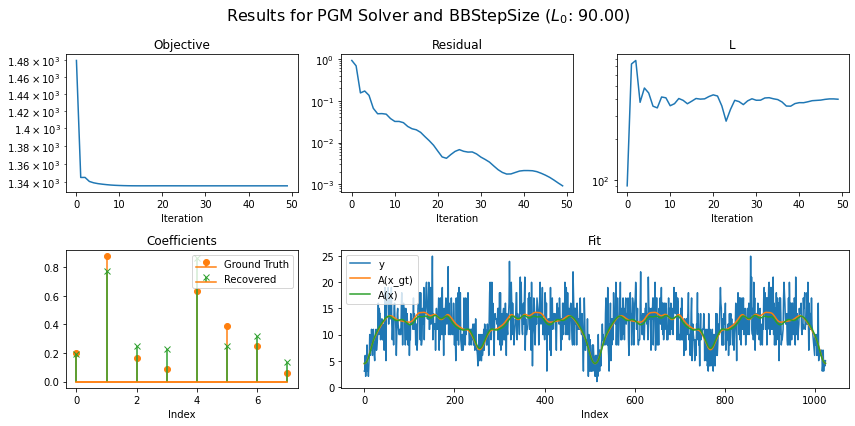

Use BBStepSize object, set L0 with arbitary initial value and set up AcceleratedPGM solver object. Run the solver and plot the recontructed signal and convergence statistics.

[7]:

L0 = 90.0 # initial reciprocal of gradient descent step size

str_L0 = "(Arbitrary Initialization)"

solver = AcceleratedPGM(

f=f,

g=g,

L0=L0,

x0=x0,

maxiter=maxiter,

itstat_options={"display": True, "period": 10},

step_size=BBStepSize(),

)

str_ss = type(solver.step_size).__name__

print("===================================================")

print("Running solver with step size of class: ", str_ss)

print("L0 " + str_L0 + ": ", L0, "\n")

x = solver.solve() # Run the solver.

hist = solver.itstat_object.history(transpose=True)

plot_results(hist, str_ss, L0, x, x_gt, A)

===================================================

Running solver with step size of class: BBStepSize

L0 (Arbitrary Initialization): 90.0

Iter Time Objective L Residual

-----------------------------------------------

0 1.43e+00 1.479e+03 9.000e+01 9.151e-01

10 2.35e+00 1.336e+03 3.557e+02 3.194e-02

20 2.68e+00 1.336e+03 4.288e+02 6.221e-03

30 2.98e+00 1.336e+03 3.908e+02 4.487e-03

40 3.33e+00 1.336e+03 3.750e+02 2.139e-03

49 3.64e+00 1.336e+03 3.977e+02 9.227e-04

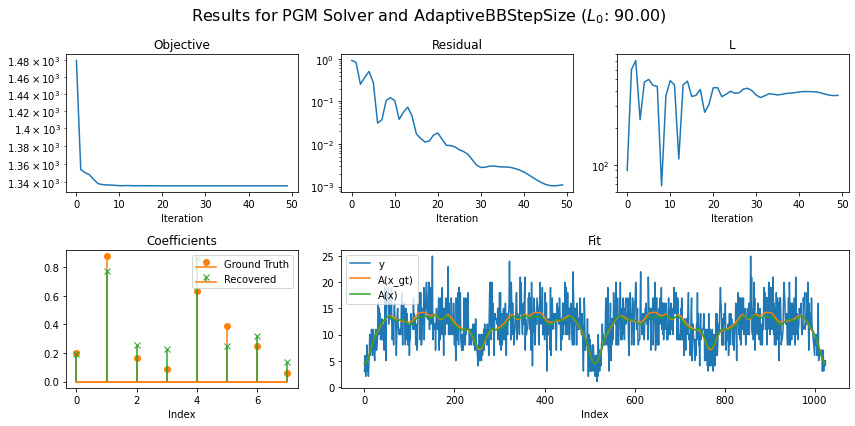

Use AdaptiveBBStepSize object, set L0 with arbitary initial value and set up AcceleratedPGM solver object. Run the solver and plot the recontructed signal and convergence statistics.

[8]:

L0 = 90.0 # initial reciprocal of gradient descent step size

str_L0 = "(Arbitrary Initialization)"

solver = AcceleratedPGM(

f=f,

g=g,

L0=L0,

x0=x0,

maxiter=maxiter,

itstat_options={"display": True, "period": 10},

step_size=AdaptiveBBStepSize(kappa=0.75),

)

str_ss = type(solver.step_size).__name__

print("===========================================================")

print("Running solver with step size of class: ", str_ss)

print("L0 " + str_L0 + ": ", L0, "\n")

x = solver.solve() # Run the solver.

hist = solver.itstat_object.history(transpose=True)

plot_results(hist, str_ss, L0, x, x_gt, A)

===========================================================

Running solver with step size of class: AdaptiveBBStepSize

L0 (Arbitrary Initialization): 90.0

Iter Time Objective L Residual

-----------------------------------------------

0 1.59e-01 1.479e+03 9.000e+01 9.151e-01

10 6.54e-01 1.336e+03 4.879e+02 1.040e-01

20 9.37e-01 1.336e+03 4.255e+02 1.810e-02

30 1.28e+00 1.336e+03 3.710e+02 2.811e-03

40 1.66e+00 1.336e+03 3.941e+02 2.196e-03

49 2.00e+00 1.336e+03 3.691e+02 1.097e-03

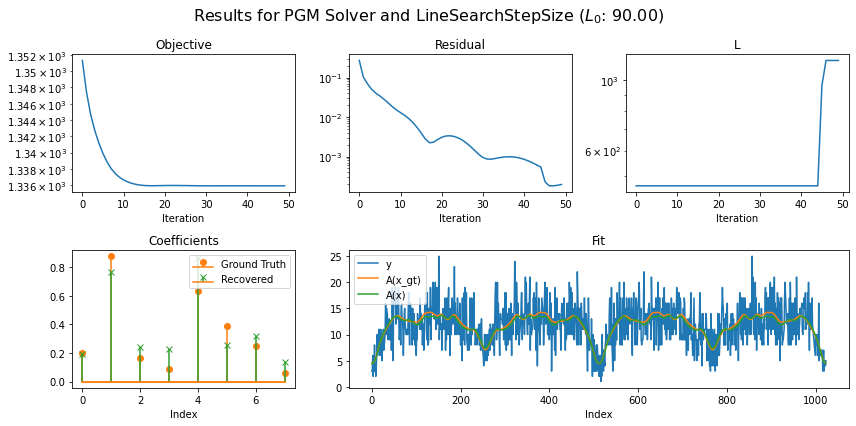

Use LineSearchStepSize object, set L0 with arbitary initial value and set up AcceleratedPGM solver object. Run the solver and plot the recontructed signal and convergence statistics.

[9]:

L0 = 90.0 # initial reciprocal of gradient descent step size

str_L0 = "(Arbitrary Initialization)"

solver = AcceleratedPGM(

f=f,

g=g,

L0=L0,

x0=x0,

maxiter=maxiter,

itstat_options={"display": True, "period": 10},

step_size=LineSearchStepSize(),

)

str_ss = type(solver.step_size).__name__

print("===========================================================")

print("Running solver with step size of class: ", str_ss)

print("L0 " + str_L0 + ": ", L0, "\n")

x = solver.solve() # Run the solver.

hist = solver.itstat_object.history(transpose=True)

plot_results(hist, str_ss, L0, x, x_gt, A)

===========================================================

Running solver with step size of class: LineSearchStepSize

L0 (Arbitrary Initialization): 90.0

Iter Time Objective L Residual

-----------------------------------------------

0 3.59e-01 1.351e+03 4.644e+02 2.699e-01

10 6.89e-01 1.337e+03 4.644e+02 1.291e-02

20 1.03e+00 1.336e+03 4.644e+02 3.084e-03

30 1.37e+00 1.336e+03 4.644e+02 9.443e-04

40 1.69e+00 1.336e+03 4.644e+02 8.497e-04

49 2.04e+00 1.336e+03 1.156e+03 1.971e-04

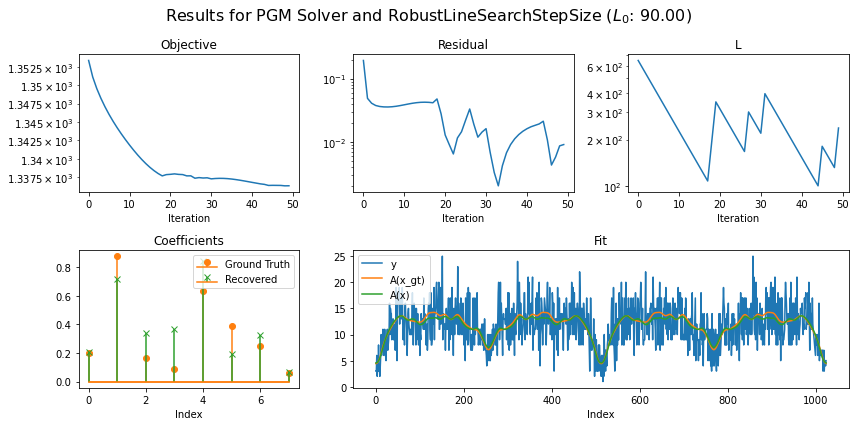

Use RobustLineSearchStepSize object, set L0 with arbitary initial value and set up AcceleratedPGM solver object. Run the solver and plot the recontructed signal and convergence statistics.

[10]:

L0 = 90.0 # initial reciprocal of gradient descent step size

str_L0 = "(Arbitrary Initialization)"

solver = AcceleratedPGM(

f=f,

g=g,

L0=L0,

x0=x0,

maxiter=maxiter,

itstat_options={"display": True, "period": 10},

step_size=RobustLineSearchStepSize(),

)

str_ss = type(solver.step_size).__name__

print("=================================================================")

print("Running solver with step size of class: ", str_ss)

print("L0 " + str_L0 + ": ", L0, "\n")

x = solver.solve() # run the solver

hist = solver.itstat_object.history(transpose=True)

plot_results(hist, str_ss, L0, x, x_gt, A)

=================================================================

Running solver with step size of class: RobustLineSearchStepSize

L0 (Arbitrary Initialization): 90.0

Iter Time Objective L Residual

-----------------------------------------------

0 3.74e-01 1.353e+03 6.480e+02 1.934e-01

10 6.83e-01 1.342e+03 2.259e+02 3.863e-02

20 9.99e-01 1.338e+03 3.151e+02 1.290e-02

30 1.31e+00 1.337e+03 2.198e+02 1.624e-02

40 1.61e+00 1.337e+03 1.532e+02 1.618e-02

49 1.91e+00 1.336e+03 2.375e+02 9.108e-03